The other day I wanted GitHub Copilot to write a boring method for me: an Update operation (the U in CRUD). I wasn't happy with the result at all, so I decided to test the various AI models available in Copilot Chat. This article summarizes the results.

The Setup

As I mentioned, this is real code from a real project. I was looking at a trivial update of an entity in the database via a REST service. I knew from previous experience that I would not be happy if I told Copilot to write the whole feature, so I wrote the interface and the DTOs myself and only used Copilot Chat for the actual Update method. I already had a very similar method in another class, so I told Copilot to copy it and essentially act as find and replace. The software in question is multitenant. The tenant is called Organization, so organizationId in the code is the tenant id. The example code updates an Application, an entity directly under the Organization, and the new code should update a FarmPlot, which is also an entity directly under the Organization. Here is the code to update an application:

    public async ValueTask<ApplicationDto?> UpdateAsync(
        int applicationId,
        ApplicationEditDto applicationEditDto,
        string currentUserId)
    {
        Application? application = await Context.Applications
            .Include(a => a.Organization)
            .SingleOrDefaultAsync(a => a.ApplicationId == applicationId
                && a.DeletedOn == null
                && a.Organization.DeletedOn == null);

        if (application is null || !await CanReadAsync(application.OrganizationId, currentUserId))
        {
            return null;
        }

        await ThrowIfCannotEditAsync(application.OrganizationId, currentUserId);

        applicationEditDto.UpdateEntity(application);
        application.Name = application.Name.Trim();

        await Context.SaveChangesAsync();

        int devicesCount = await GetDevicesCountAsync(applicationId);

        return application.ToDto(canEdit: true, devicesCount);
    }

This is the final human-written code for the FarmPlot update:

    public async ValueTask<FarmPlotDto?> UpdateAsync(
        int farmPlotId,
        FarmPlotEditDto farmPlotEditDto,
        string currentUserId)
    {
        FarmPlot? farmPlot = await Context.FarmPlots
            .Include(fp => fp.Organization)
            .SingleOrDefaultAsync(fp => fp.FarmPlotId == farmPlotId
                && fp.DeletedOn == null
                && fp.Organization.DeletedOn == null);

        if (farmPlot is null || !await CanReadAsync(farmPlot.OrganizationId, currentUserId))
        {
            return null;
        }

        await ThrowIfCannotEditAsync(farmPlot.OrganizationId, currentUserId);

        if (!farmPlot.Organization.FarmEnabled)
        {
            throw new BusinessException("The farm functionality is not enabled for the specified organization");
        }

        farmPlotEditDto.UpdateEntity(farmPlot);
        farmPlot.Name = farmPlot.Name.Trim();

        await Context.SaveChangesAsync();

        return farmPlot.ToDto(canEdit: true);
    }

The code retrieves an entity from the database and checks whether the user has permission to see it; if not, it returns null (converted to 404 in the REST action) so that the user can't tell whether the id is valid. Then, if the entity exists and the user can see it, the code checks for the edit permission. From this point on there are minor differences that Copilot could have figured out from the Create method present in FarmService.cs, but I was prepared to edit manually if needed. All methods called in this code already exist in the codebase, either in the base class or as extension methods; I didn't expect Copilot to write any of them. I consider this to be the most trivial real-world CRUD code. We can argue about things like whether I should use query filters for the soft-delete check and so on, but in general real-world CRUD code will have at least this level of complexity, which honestly is not a lot. And then I got this:

Results

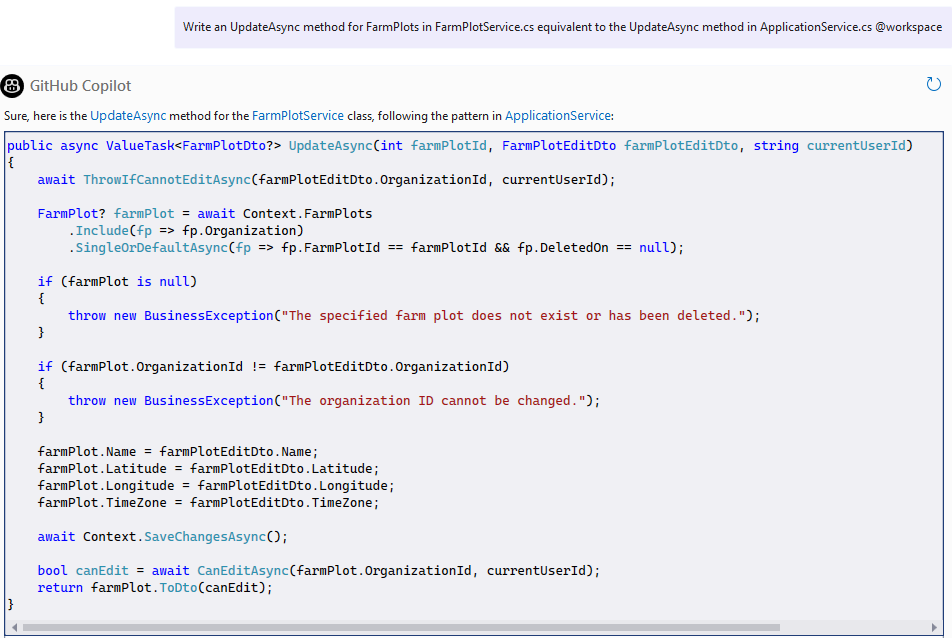

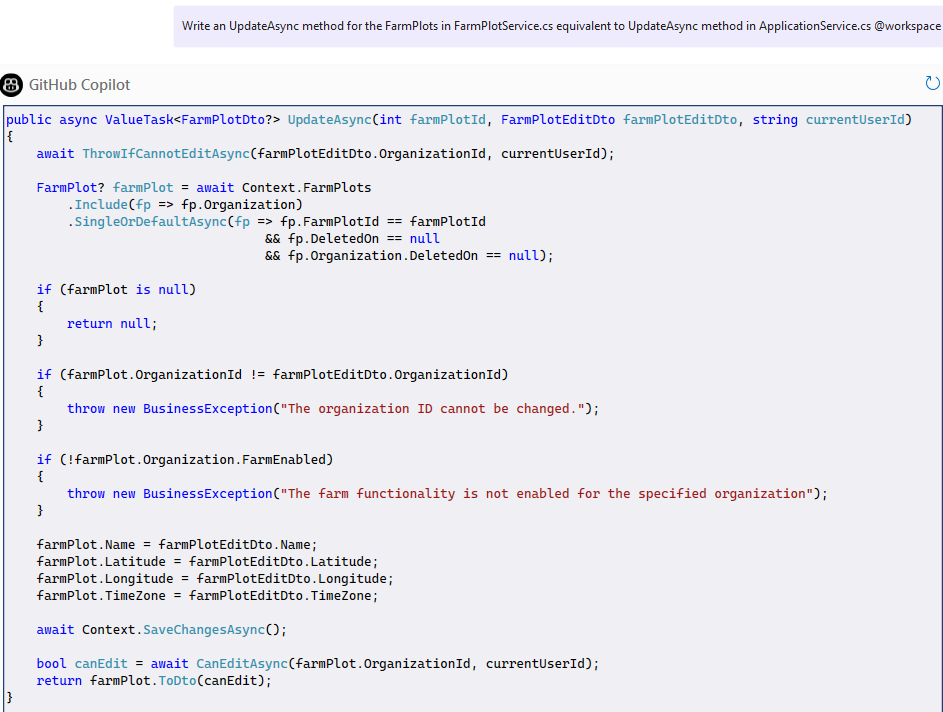

GPT-4o:

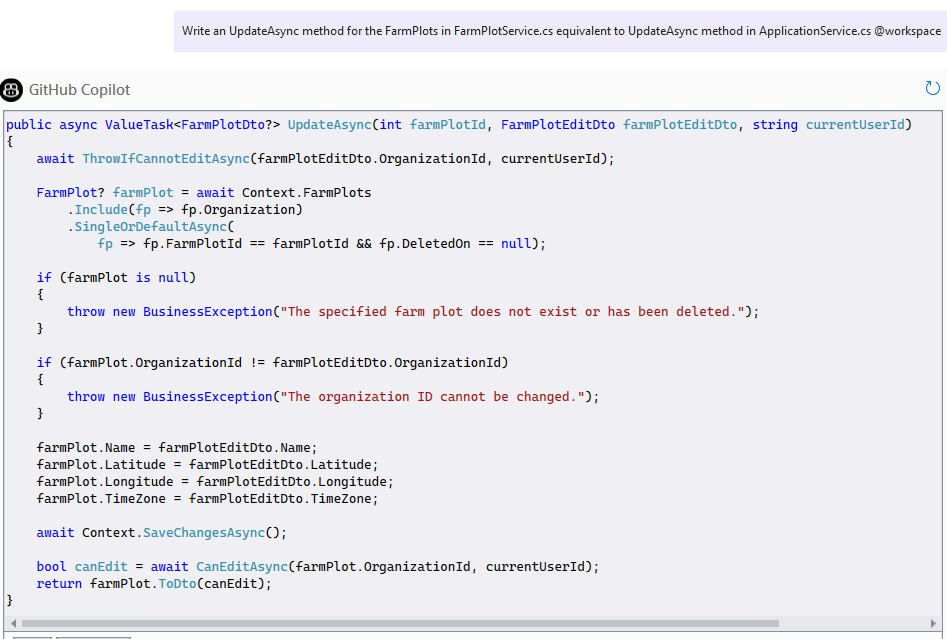

o1:

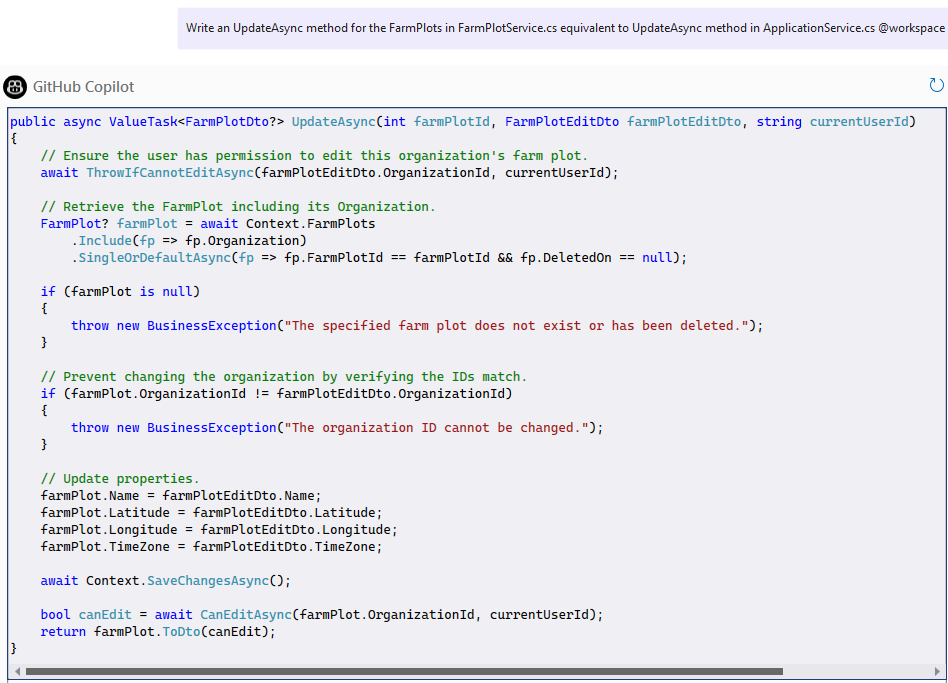

o3-mini:

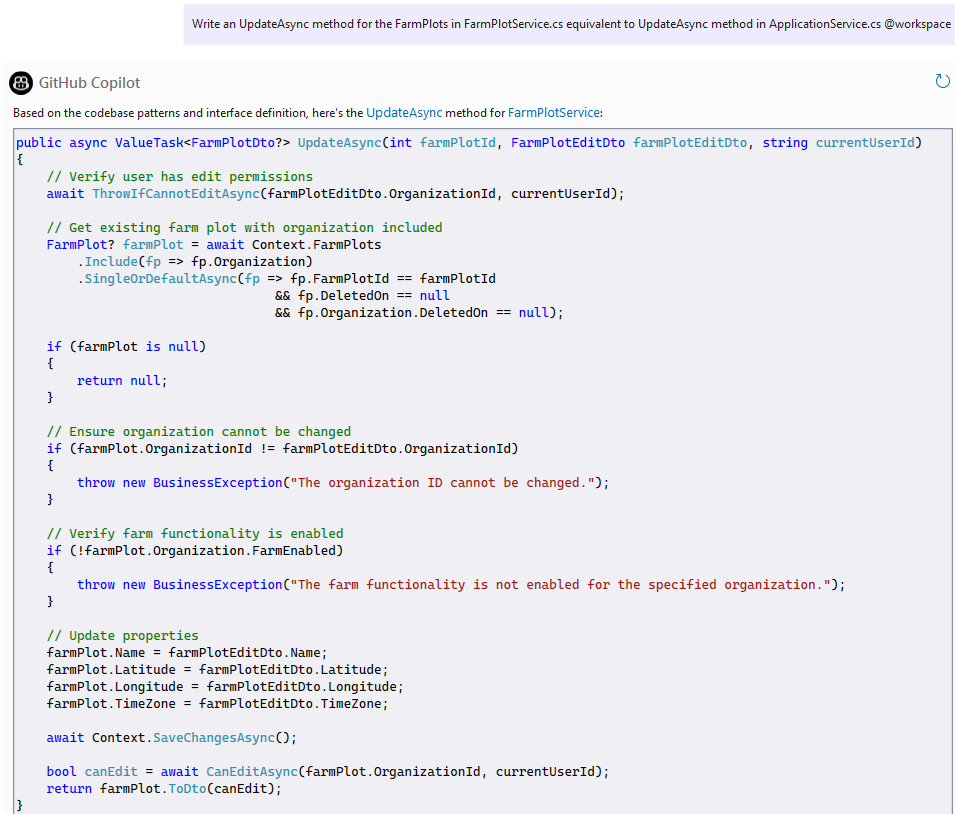

Claude 3.5 Sonnet:

Claude 3.7 Sonnet:

The prompt was:

"Write an UpdateAsync method for the FarmPlots in FarmPlotService.cs equivalent to UpdateAsync method in ApplicationService.cs @workspace"

As you can see, all of them failed. For some reason all of them decided to move the edit permission check to the top. I don't believe there is a single place in my codebase where a method starts with this check. They also decided not to use the existing mapper code in the UpdateEntity method. All of them hallucinated some OrganizationId check. It is true that a FarmPlot cannot be moved between organizations, but that is exactly why OrganizationId is not part of the FarmPlotEditDto, which was already written for the AI model to use. This code won't even compile. All of them also decided to copy a canEdit check from the get method in the same class, which is not needed here because we have already checked for that permission.
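To make the complaint about the order of the checks concrete, here is a minimal sketch of the two orderings. It is not code from my project: the in-memory dictionaries, the user names, the `PermissionSketch` class and the use of `UnauthorizedAccessException` are all assumptions made up for illustration, and the real methods are async and go through EF Core. It only shows how the result changes for a user who can't see the entity when the edit check runs before the read check.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical stand-in for an entity that lives directly under an organization.
public sealed record Plot(int Id, int OrganizationId);

public sealed class PermissionSketch
{
    // Made-up data: one plot in organization 10.
    private readonly Dictionary<int, Plot> _plots =
        new Dictionary<int, Plot> { [1] = new Plot(1, 10) };

    // organizationId -> users allowed to read / edit (made-up data).
    private readonly Dictionary<int, HashSet<string>> _readers =
        new Dictionary<int, HashSet<string>> { [10] = new HashSet<string> { "reader", "editor" } };
    private readonly Dictionary<int, HashSet<string>> _editors =
        new Dictionary<int, HashSet<string>> { [10] = new HashSet<string> { "editor" } };

    private bool CanRead(int organizationId, string userId) =>
        _readers.TryGetValue(organizationId, out var users) && users.Contains(userId);

    // The exception type is an assumption; the real ThrowIfCannotEditAsync may throw something else.
    private void ThrowIfCannotEdit(int organizationId, string userId)
    {
        if (!_editors.TryGetValue(organizationId, out var users) || !users.Contains(userId))
        {
            throw new UnauthorizedAccessException("The user cannot edit this organization.");
        }
    }

    // Order used in the human-written method: read check first, edit check second.
    public Plot? UpdateReadCheckFirst(int plotId, string userId)
    {
        if (!_plots.TryGetValue(plotId, out var plot) || !CanRead(plot.OrganizationId, userId))
        {
            return null; // same answer for "does not exist" and "not visible to this user"
        }

        ThrowIfCannotEdit(plot.OrganizationId, userId);
        return plot; // the real method would apply the DTO and save here
    }

    // Order with the edit check moved to the top.
    public Plot? UpdateEditCheckFirst(int plotId, string userId)
    {
        if (!_plots.TryGetValue(plotId, out var plot))
        {
            return null;
        }

        ThrowIfCannotEdit(plot.OrganizationId, userId); // throws only for ids that exist
        return plot;
    }
}
```

In this sketch a user with no access gets null from `UpdateReadCheckFirst` for both id 1 and a non-existent id, while `UpdateEditCheckFirst` throws for id 1 and returns null for the non-existent id, so the two cases become distinguishable. A user who can read but not edit still gets the exception from `UpdateReadCheckFirst`, which is the intended behavior.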

The Claude models did better because they properly returned null instead of throwing an exception of a type that is not relevant here. They also included a proper soft-delete check that covers the Organization and knew (probably by looking at the Create method present in the class) to check for FarmEnabled. They are clearly better than the OpenAI models but still useless, as I would have done the task faster by hand. None of the models included the Trim() call.

I had a conversation with Claude 3.7 Sonnet, supposedly the smartest one, and asked it to follow the pattern in ApplicationService precisely. It failed again. I asked it whether the UpdateAsync in ApplicationService starts with ThrowIfCannotEditAsync; it said "no", so I asked it why its code starts with it. It fixed that part of the code at least and knew to include the CanRead check, but everything else that was broken was still there.

Somehow these models can build a 3D city simulation and whatnot but can't do the simplest real-world task in an existing codebase. And here I was hoping to retire and have the AI work for me... well, back to work I guess...

Update 27.04.2025

So a lot of people were telling me my problem is that I am not using Cursor. I think this is bullshit, as Copilot can use Claude 3.7 Sonnet, which is what Cursor uses, but I decided to prove them wrong. So I downloaded Cursor and used it for a couple of hours just to make sure it had warmed up in my codebase. I then deleted the existing code in Cursor (meaning that Cursor saw it; not sure if this matters) and used (almost) the same prompt. To my surprise, Cursor spit out a basically perfect method. I was shocked.

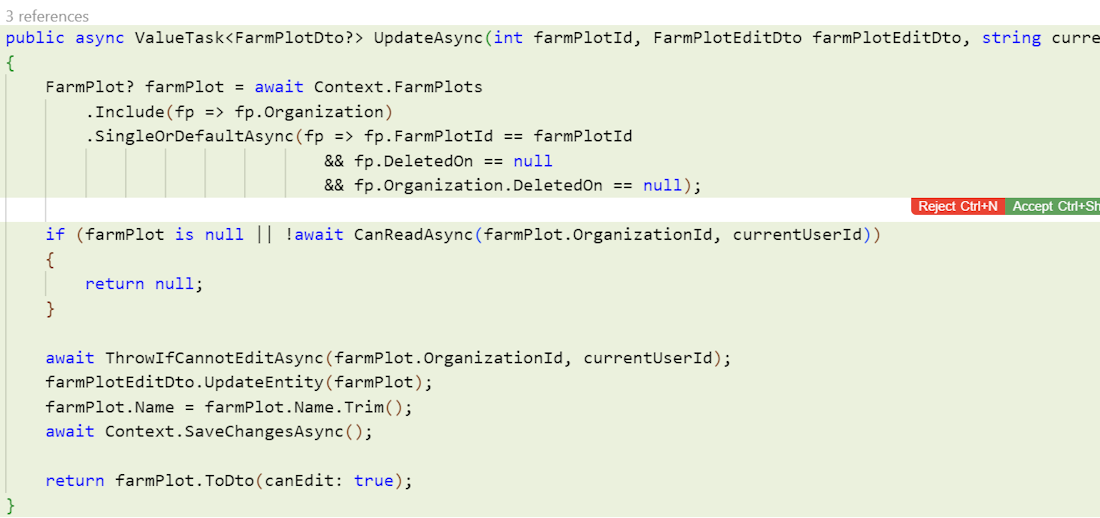

Claude 3.7 Sonnet in Cursor:

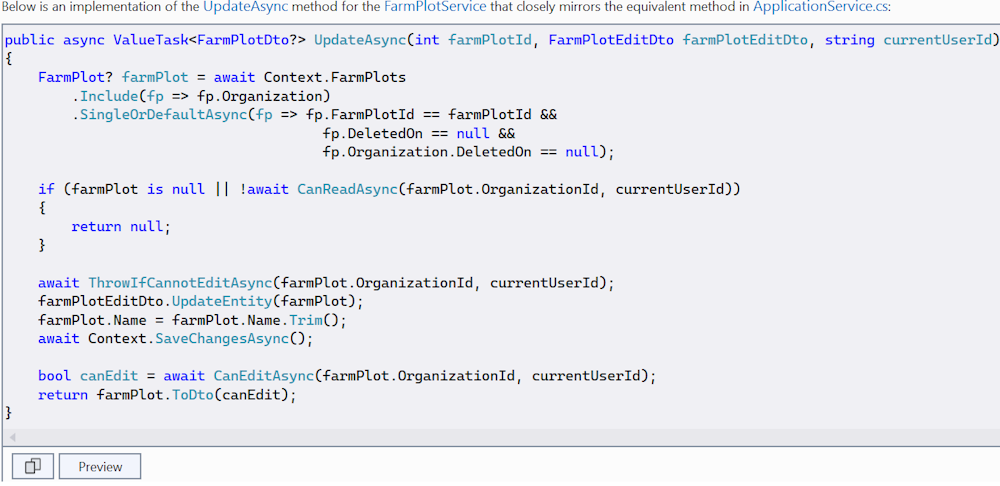

How can this be? My prompt was "Write an UpdateAsync method for @FarmPlotService.cs FarmPlotService.cs equivalent to UpdateAsync method in @ApplicationService.cs". Because I didn't know how to reference my workspace in Cursor, I gave the AI less context. I immediately tried the same thing in Visual Studio with GitHub Copilot and the result was almost the same, except that it added some Includes to the query. At first I thought the Includes were useless, but then I remembered that I had changed the feature since I wrote the article. These Includes were actually needed. I think the better result happens because Copilot still found and referenced more context than the two files I named, for example the unit tests for the FarmPlotService class, but not nearly as much as @workspace gives it. To my surprise, giving the AI more context does not make the result better. In this task smaller was better, but for the best result it needed a bit more than the smallest context. I was too lazy to try all the AIs, but I tried o1 and o3-mini. They did worse than Claude 3.7 Sonnet but still better than Claude 3.7 Sonnet did in my previous experiment with @workspace as context. They added an unneeded can-edit check at the end of the method. Both models wrote the same code (minus some formatting differences), but o3-mini was much faster (o1 was still faster than Claude 3.7 Sonnet).

o3 mini with reduced context:

I am still not happy with the result. While the resulting method was great, getting it took more time than copying and pasting it by hand would have. But that's not the real issue. The issue is that, given access to the workspace, the models were not good enough at figuring out which context is relevant, so I have to be very precise about that. As far as I understand, the tools look at your files, try to decide which of them might be relevant, and include those in the prompt context, and apparently they are not doing a very good job. In addition, I believe bigger tasks that require more context will cause more problems. I will look for an opportunity to experiment further, maybe by giving it a similarly trivial but more complete feature, one that goes through all the layers of the application.

As scientists who publish papers like to write: More research is needed.